2022. 10. 6. 15:38 · AI, Deep Learning, and Machine Learning Basics

In this paper, we propose a data-driven approach using the state-of-the-art Long Short-Term Memory (LSTM) network.

The proposed model was applied in the Poyang Lake Basin (PYLB), and its performance was compared with an Artificial Neural Network (ANN) and the Soil & Water Assessment Tool (SWAT).

For the PYLB, a window size of 15 days might be appropriate for both accuracy and computational efficiency.

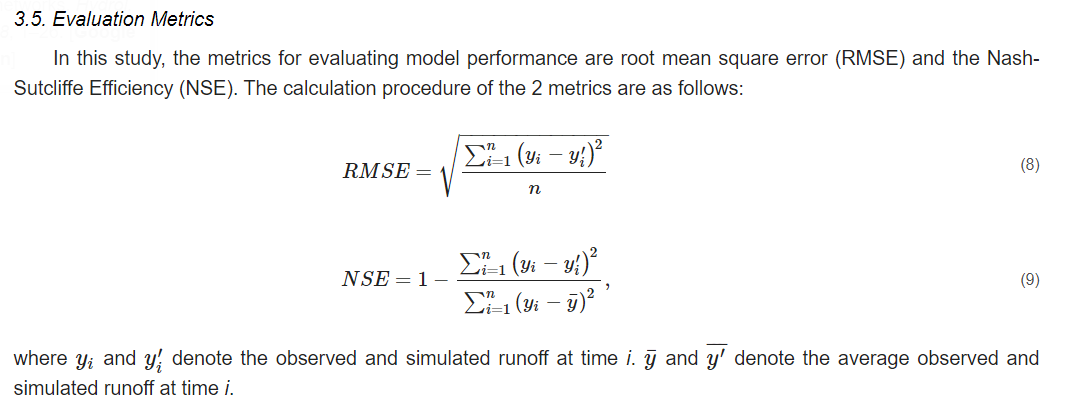

Results demonstrate that although LSTM with precipitation data as the only input can achieve desirable results (NSE ranged from 0.60 to 0.92 for the test period), performance can be improved simply by feeding the model more meteorological variables (NSE ranged from 0.74 to 0.94 for the test period).

The comparison with the ANN and SWAT showed that the ANN achieves performance comparable to SWAT in most cases, whereas the LSTM performs much better.
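The NSE values quoted above are Nash-Sutcliffe efficiency scores, where 1 is a perfect fit and 0 means the model is no better than always predicting the mean of the observations. A minimal sketch of the metric in plain Python follows; the function name `nse` and the sample values are made up for illustration and do not come from the paper.

```python
def nse(observed, simulated):
    """Nash-Sutcliffe efficiency: 1 - (sum of squared errors) / (spread of observations about their mean)."""
    if len(observed) != len(simulated):
        raise ValueError("observed and simulated must have the same length")
    mean_obs = sum(observed) / len(observed)
    squared_error = sum((o - s) ** 2 for o, s in zip(observed, simulated))
    spread = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - squared_error / spread


# Hypothetical runoff values, for illustration only.
obs = [1.0, 2.0, 3.0, 4.0]
perfect = nse(obs, obs)               # simulation identical to observations
mean_only = nse(obs, [2.5] * 4)       # simulation that always predicts the observed mean
```

Here `perfect` evaluates to 1.0 and `mean_only` to 0.0, which are the two reference points for reading the reported ranges.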

[LSTM]

- The model contains three layers.

- One LSTM layer with 128 LSTM neurons was set as the input layer.

- A dropout layer with a dropout rate of 0.4 was set on the first LSTM layer.

- A fully connected layer was then set up as the output layer, which yields 5 distinct runoff time series.

- Input data: D1, D2

*D1: contains only the precipitation data

*D2: contains all available meteorological data (e.g., precipitation, air temperature, relative humidity, and so on)
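To make the layer stack concrete, here is a rough NumPy sketch of a forward pass through an LSTM layer (128 units), dropout (rate 0.4), and a fully connected output with 5 values. It is not the authors' implementation: the weight initialization, the gate ordering, the choice to feed only the last hidden state to the output layer, and the names `init_params` and `forward` are all assumptions made for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_params(n_features, n_units=128, n_outputs=5, seed=0):
    """Random weights for illustration only (assumed scale, not from the paper)."""
    rng = np.random.default_rng(seed)
    return {
        "W": rng.normal(0.0, 0.1, (n_features, 4 * n_units)),  # input weights, 4 gates stacked
        "U": rng.normal(0.0, 0.1, (n_units, 4 * n_units)),     # recurrent weights, 4 gates stacked
        "b": np.zeros(4 * n_units),
        "W_out": rng.normal(0.0, 0.1, (n_units, n_outputs)),   # fully connected output layer
        "b_out": np.zeros(n_outputs),
    }


def forward(params, window, dropout_rate=0.4, training=False, rng=None):
    """window has shape (window_size, n_features); returns one value per runoff series."""
    n_units = params["U"].shape[0]
    h = np.zeros(n_units)  # hidden state
    c = np.zeros(n_units)  # cell state
    for x_t in window:
        z = x_t @ params["W"] + h @ params["U"] + params["b"]
        i, f, g, o = np.split(z, 4)  # input gate, forget gate, candidate, output gate
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    if training:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(n_units) >= dropout_rate
        h = h * keep / (1.0 - dropout_rate)  # inverted dropout, active only in training
    return h @ params["W_out"] + params["b_out"]


# D1-style input: 15 days of a single variable (random placeholder values).
window_d1 = np.random.default_rng(1).random((15, 1))
out_d1 = forward(init_params(n_features=1), window_d1)

# D2-style input: 15 days of several variables (4 is an arbitrary, assumed count).
window_d2 = np.random.default_rng(2).random((15, 4))
out_d2 = forward(init_params(n_features=4), window_d2)
```

Switching from D1 to D2 only changes the feature dimension of the input; the 128-unit layer and the 5-value output stay the same.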

[ANN]

- Three-layer feed-forward network

- It uses a hyperbolic tangent sigmoid transfer function in the hidden layer

- and a linear transfer function in the output layer

- Similar to the proposed LSTM model, the ANN model also contains 3 layers.

- One fully connected layer with 128 neurons was set as the input layer.

- A dropout layer was set on the first layer with a dropout rate of 0.4.

- A fully connected layer was then set up as the output layer, which yields 5 distinct runoff time series.
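The ANN baseline described above can be sketched the same way: a 128-neuron fully connected layer with a tanh activation, dropout at 0.4, and a linear output layer with 5 values. How the paper shapes the ANN input is not stated in these notes, so the flat 15-element input vector, the random weights, and the name `ann_forward` below are assumptions.

```python
import numpy as np


def ann_forward(x, params, dropout_rate=0.4, training=False, rng=None):
    """Feed-forward pass: tanh hidden layer, optional dropout, linear output layer."""
    hidden = np.tanh(x @ params["W1"] + params["b1"])
    if training:
        if rng is None:
            rng = np.random.default_rng()
        keep = rng.random(hidden.shape) >= dropout_rate
        hidden = hidden * keep / (1.0 - dropout_rate)  # inverted dropout
    return hidden @ params["W2"] + params["b2"]       # linear transfer function


rng = np.random.default_rng(0)
n_inputs = 15  # assumed: e.g. 15 days of precipitation flattened into one vector
ann_params = {
    "W1": rng.normal(0.0, 0.1, (n_inputs, 128)),
    "b1": np.zeros(128),
    "W2": rng.normal(0.0, 0.1, (128, 5)),
    "b2": np.zeros(5),
}
ann_out = ann_forward(rng.random(n_inputs), ann_params)
```

Unlike the LSTM, this network has no recurrent state, so any ordering information in the input days is only available through the position of each value in the input vector.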

[Tuning Procedure for LSTM]

- Tuning for Window Size

- The impact of window size was examined from a very short period to a relatively long period.

- We compromised by presenting results for eleven selected window sizes, including 1, 5, 10, 15, 20, 25, 30, 60, 90, and 180 days.

- The daily and weekly impacts of meteorological variables can be illustrated by window sizes from 1 to 25 days,

- whereas the monthly impacts can be illustrated by a window size of 30 days.
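A window size of N days means each training sample consists of N consecutive days of meteorological input. One way this could be set up is sketched below; pairing each window with the runoff on the window's last day is an assumption, as are the function name `make_windows` and the sample values.

```python
def make_windows(features, targets, window_size):
    """Pair each run of `window_size` consecutive days with the target on the run's last day."""
    samples = []
    for end in range(window_size, len(features) + 1):
        window = features[end - window_size:end]
        samples.append((window, targets[end - 1]))
    return samples


# Hypothetical daily precipitation (one feature per day) and runoff values.
precip = [[0.0], [5.2], [1.1], [0.0], [8.4], [2.3]]
runoff = [10.0, 12.5, 11.8, 11.0, 15.1, 13.7]

samples = make_windows(precip, runoff, window_size=3)
```

With 6 days of data and a window of 3, this produces 4 samples. Larger windows give the model a longer history per sample but leave fewer samples and cost more per training step, which is the accuracy versus efficiency trade-off behind the choice of window size.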

- Tuning for Hyperparameters

- Hyperparameter optimization, or tuning, is the process of finding a tuple of hyperparameters that yields a model which minimizes the loss function on the given data.

- We used the mean squared error as the loss function for hyperparameter optimization.

- Common hyperparameters include the learning rate, the number of training epochs, the dimensionality of the output space, and so on.

- The learning rate is a hyperparameter that represents the step size in a gradient descent method.

- In this study, we use the efficient Adam version of stochastic gradient descent.

- The initial learning rate was set to 0.2, and a time-based decay rate was used to update the learning rate throughout training.

- Training for too long can cause the model to overfit,

- whereas training for too short a time leaves the model underfit, meaning it has not learned the relevant patterns in the training data.
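The mean squared error loss and the time-based learning-rate decay mentioned above can be sketched as follows. The notes give only the initial learning rate (0.2); the decay formula shown here is the common form `lr = lr0 / (1 + decay * step)`, and the decay value 0.01 is a placeholder, not a figure from the paper.

```python
def mse(observed, predicted):
    """Mean squared error between two equal-length sequences."""
    return sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)


def time_based_lr(initial_lr, decay_rate, step):
    """Learning rate after `step` updates under time-based decay."""
    return initial_lr / (1.0 + decay_rate * step)


initial_lr = 0.2    # from the notes
decay_rate = 0.01   # hypothetical value, not given in the notes

schedule = [time_based_lr(initial_lr, decay_rate, s) for s in (0, 100, 1000)]
loss = mse([1.0, 2.0], [1.0, 4.0])
```

The schedule starts at 0.2, halves to about 0.1 after 100 steps, and keeps shrinking, so early updates are large and later updates become progressively finer.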
